Bad News, DBAs, We Are All Developers Now

I sometimes joke that I’m a Junior Developer and a Principal Database Administrator, which is why I have a Staff level title. I’m not sure …

Read MoreI recently posted on LinkedIn that I was interviewing for a SQL Server database administrator role for the first time in a long time. I invited folks: “Ask me your favorite interview question!”

Stephen Vakil had a great one: “when should you use SQL Server to store your data?”

For situations where SQL Server is already in use and there’s a relatively low barrier to entry, I think it’s simpler to turn this question around and ask, “When is SQL Server not a good choice for storing your data?”

This post is also available as a podcast episode, and on YouTube.

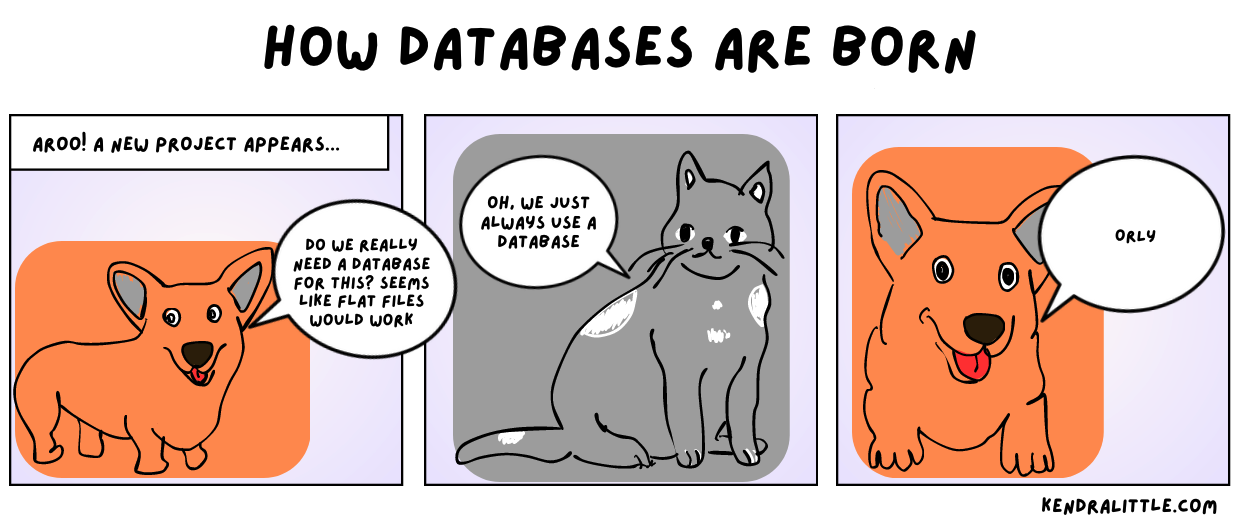

This is going to seem like an obvious question but…. do you actually need a database?

Would flat files work for your purpose? Do you only need a readable cache and very low latency? Would an API interface be better? (And maybe what’s behind it is a database, or maybe it’s not.)

It’s very easy to get comfortable with a database that the team is quite familiar with, and to default to using it. Especially because of this, it’s a good practice to as regularly: do we need this in a database? Is there a less expensive and easier to manage option, or an architecture that would work better in the long run?

SQL Server’s primary strength is being a reliable, high performing relational database that handles structured data well. This means it’s great for situations where:

This is the core scenario where the SQL Server database engine and query optimization shine.

While SQL Server can deal with unstructured data, if this is the primary use case for the application then a type of database that is designed to optimize storing and accessing unstructured data is worth considering. Databases that specialize in handling unstructured data include MongoDB and Couchbase, but the right choice will vary by your use case, your deployment scenario (cloud/on-prem), and other variables considered below.

If you are not interested in normalizing data in SQL Server because you wish to use the database primarily for analytics, you might also want to consider other databases…

SQL Server is very good at transactional processing, with lots of inserts, updates, and deletes. SQL Server has multiple options on how to implement ACID compliant transactions when you have a workload that consists of concurrent reads and writes. Here’s a review of those ACID properties:

If your primary use case for SQL Server is an OLTP workload, but you’d also like to do some reporting against those tables, that can sometimes work well in SQL Server: it even has a columnar storage option that can help with large scans.

However, if your primary or only use case for a database is reporting or analytics, you might not want to use “classic” SQL Server. Depending on your use case, you might consider a database or warehousing approach designed purely for analytics. There are many different options for analytics workloads, but here are three that come up a lot these days:

Technically, SQL Server has an option for having multiple writeable copies of a database: peer-to-peer replication.

But I haven’t heard of anyone deploying a new peer-to-peer setup in many years. It’s not a popular choice, because it tends to be pretty fragile: you need to design the schema carefully to avoid having a lot of conflicts if the same rows are updated on different nodes. Microsoft recommends:

To avoid potential data inconsistency, make sure that you avoid conflicts in a peer-to-peer topology, even with conflict detection enabled. To ensure that write operations for a particular row are performed at only one node, applications that access and change data must partition insert, update, and delete operations. This partitioning ensures that modifications to a given row originating at one node are synchronized with all other nodes in the topology before the row is modified by a different node.

Essentially, some very specific application designs may work with peer to peer replication, but even then, living with P2P and troubleshooting it in real-world scenarios is quite complex and time consuming. I would generally look at other options first if I need to scale out writes for a single database.

Other options for SQL Server, including Azure SQL variations of SQL Server, allow only one writable node for a database.

This is just a terminology thing– “SQL Server” generally refers to the “boxed software” version of SQL Server that you either install yourself, or run pre-installed on a VM in an implementation like SQL Server on Azure VM.

SQL Server can require quite a lot of care and feeding: backups, maintenance, configuration, upgrades/patching, and monitoring. By using a Platform as a Service (PaaS) option, you can limit some of this work.

For these cases, a hosted database option can be the right answer. This might be something very similar to SQL Server, like Azure SQL Database, Azure SQL Managed Instance, or Amazon RDS for SQL Server. Or a hosted version of another relational database, based on your preference, might fit, depending on the requirements of the situation .

SQL Server licensing gets expensive quickly. Sometimes this leads to people just throwing things on the SQL Server: hey, we paid a lot for these licenses, might as well use it!

And while that’s fine in many cases, eventually adding on all those use cases will likely lead to spending more on SQL Server licensing. Cost is generally a consideration for any project, and if you need a cheap solution then SQL Server generally shouldn’t be your first choice. (Unless, of course, you work at a place that uses a lot of Oracle. In that case, the SQL Server will look cheap.)

Copyright (c) 2025, Catalyze SQL, LLC; all rights reserved. Opinions expressed on this site are solely those of Kendra Little of Catalyze SQL, LLC. Content policy: Short excerpts of blog posts (3 sentences) may be republished, but longer excerpts and artwork cannot be shared without explicit permission.