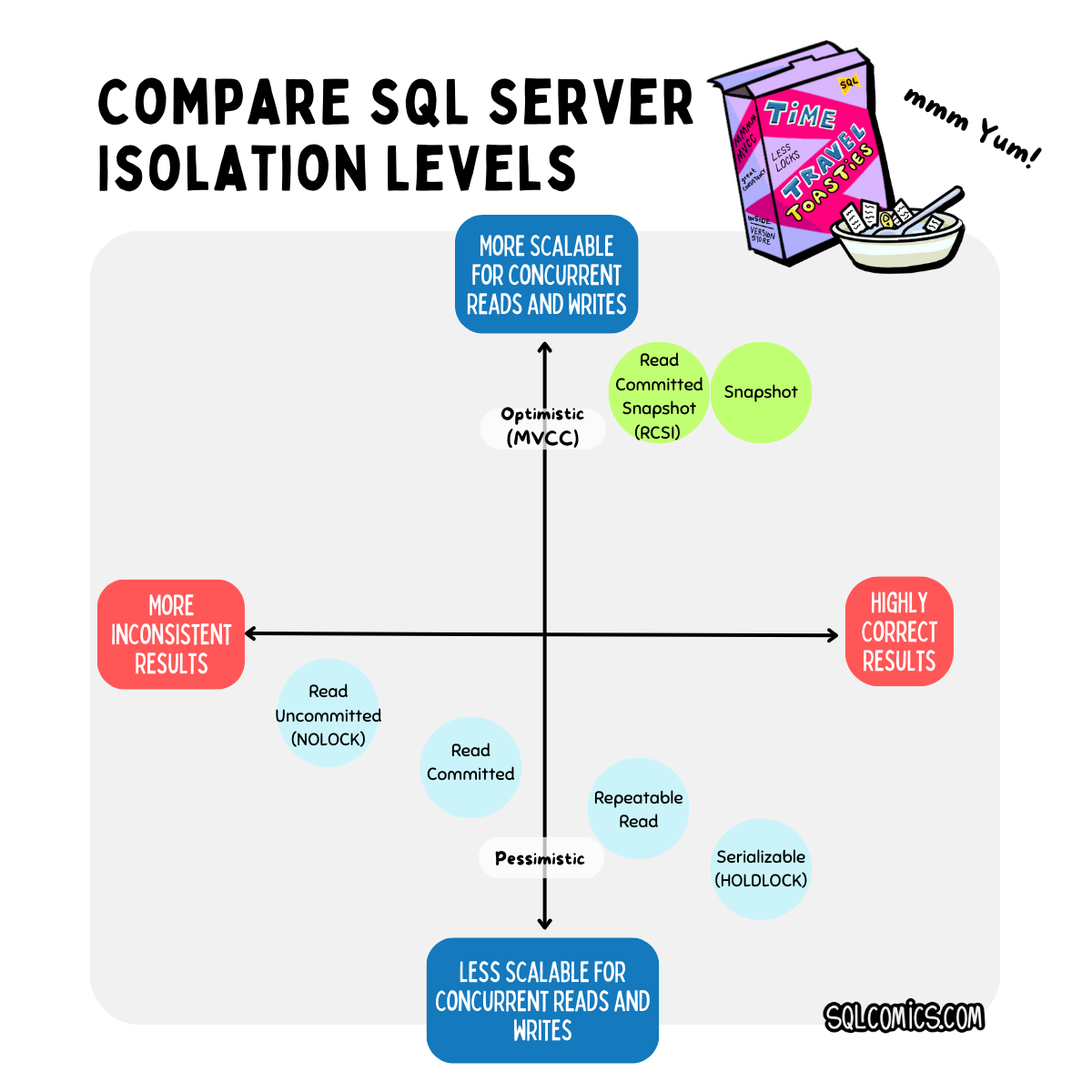

I shared an image on social media this week that describes how I feel about isolation levels in SQL Server (and its various flavors): the more concurrent sessions you have in a database reading and writing data at the same time, the more attractive it is to use version-based optimistic locking for scalability reasons.

There are two isolation levels in SQL Server that use optimistic locking for disk-based tables: Read Committed Snapshot Isolation (RCSI) and Snapshot Isolation.
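RCSI and Snapshot isolation are each switched on with a database-level setting. Here is a minimal sketch of what that looks like, assuming you run it while connected to the database you want to change and that you have tested the impact on tempdb and your workload first:

```sql
-- Sketch only: run while connected to the target database.

-- Changes how the default Read Committed level is implemented (RCSI).
-- This statement waits until it is the only active connection in the
-- database; adding WITH ROLLBACK IMMEDIATE would instead roll back
-- other sessions' open transactions, so use that with care.
ALTER DATABASE CURRENT SET READ_COMMITTED_SNAPSHOT ON;

-- Lets sessions opt in to Snapshot isolation explicitly.
ALTER DATABASE CURRENT SET ALLOW_SNAPSHOT_ISOLATION ON;

-- Check the current settings for this database.
SELECT name,
       is_read_committed_snapshot_on,
       snapshot_isolation_state_desc
FROM sys.databases
WHERE database_id = DB_ID();
```

The first setting changes behavior for every query that runs under the default Read Committed level. The second only matters for sessions that explicitly ask for Snapshot isolation.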

Many folks get pretty nervous about RCSI when they learn that certain timing effects can happen with data modifications that don’t happen under Read Committed. The irony is that RCSI does solve many OTHER timing risks in Read Committed, and overall is more consistent, so sticking with the pessimistic implementation of Read Committed is not a great solution, either.

Like many folks, I chat with various AI assistants these days. Although their claims require fact-checking, they help me understand concepts I’ve had a hard time getting my head around, as well as improve my wording on concepts I already DO understand. And unlike some forums, AI doesn’t chastise me that my question’s already been asked by user GoblinFeet1000 and will reword things without judgement until I catch on. (Aside: one example is the Halloween problem.)

So I gave GitHub Copilot the prompt: “what specific kinds of lost updates can happen under read committed snapshot isolation level in sql server”

Here’s my improved summary of RCSI’s timing issues after its help:

Under RCSI, readers generally don’t block writers, and writers don’t block readers. Instead of waiting, a reader sees the last committed version of any row that another transaction has modified but not yet committed. If one transaction reads a row this way and then updates it based on what it read, it can overwrite the other transaction’s change after that change commits, and that change is lost. This doesn’t happen with every type of modification, but some query plans for modification allow this risk.
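To make that concrete, here is a rough sketch of one read-then-write shape where this can bite. The table `dbo.Inventory`, its columns, and the item id are hypothetical names made up for illustration. This is not the only pattern at risk, and the same shape can also lose updates under pessimistic Read Committed, so treat it as an example rather than a full catalog:

```sql
-- Hypothetical table: dbo.Inventory (ItemId int PRIMARY KEY, Quantity int).

-- Risky pattern: read a value, then write a value computed from that read.
DECLARE @qty int;

BEGIN TRANSACTION;
    -- Under RCSI this returns the last committed version of the row and
    -- does not wait for another session's uncommitted change to finish.
    SELECT @qty = Quantity
    FROM dbo.Inventory
    WHERE ItemId = 42;

    -- If another session committed a change to Quantity after the SELECT,
    -- this overwrites it with a value based on stale data.
    UPDATE dbo.Inventory
    SET Quantity = @qty - 1
    WHERE ItemId = 42;
COMMIT TRANSACTION;

-- Safer for this simple case: do the read and the write in one statement,
-- so the new value is computed from the row as it is when it gets locked.
UPDATE dbo.Inventory
SET Quantity = Quantity - 1
WHERE ItemId = 42;
```

The single-statement version works here because it is a simple update against one table. More complex modification queries that read from other tables need a closer look.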

But even re-worded, that explanation can make people pretty nervous to enable RCSI. (Note: if you’d like a technical post on timing issues under RCSI, Paul White has written a great one.)

Read Committed has plenty of its own timing issues. It’s just that most people figure they already have it and nobody is complaining, so it’s “comfortable” as the status quo.

Under Read Committed:

If good accuracy is needed for data in a highly concurrent situation, it’s not a great solution to stick with this!

According to ChatGPT, that would be like:

One essential thing is to recognize that protecting yourself against data inaccuracies with timing conditions is a requirement for using databases, no matter what isolation level you use.

For your data modifications, you can protect them from the risk of edge conditions by examining them and identifying what isolation level they actually need. Your queries that do modifications may need to run under repeatable read, serializable, or snapshot isolation level to avoid race conditions, depending on your patterns and your requirements. This can co-exist with RCSI: enabling RCSI doesn’t replace the need to sometimes use a different isolation level, it only changes how Read Committed is implemented.
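As a hedged illustration of raising the isolation level for just one critical modification, here is a sketch using a hypothetical `dbo.Inventory` table (the table, columns, and item id are assumptions for the example). Which level is right depends on your own patterns, and this is only one way to do it:

```sql
-- Hypothetical table: dbo.Inventory (ItemId int PRIMARY KEY, Quantity int).

-- Raise the isolation level for this session's next transaction.
-- REPEATABLE READ is shown; SERIALIZABLE or SNAPSHOT are set the same way.
-- (SNAPSHOT requires ALLOW_SNAPSHOT_ISOLATION to be ON for the database.)
SET TRANSACTION ISOLATION LEVEL REPEATABLE READ;

DECLARE @qty int;

BEGIN TRANSACTION;
    -- The shared lock taken by this read is held until the transaction
    -- ends, so no other session can change the row in the meantime.
    SELECT @qty = Quantity
    FROM dbo.Inventory
    WHERE ItemId = 42;

    IF @qty > 0
        UPDATE dbo.Inventory
        SET Quantity = @qty - 1
        WHERE ItemId = 42;
COMMIT TRANSACTION;

-- Return the session to the default level (implemented as RCSI if enabled).
SET TRANSACTION ISOLATION LEVEL READ COMMITTED;
```

Stronger protection has a cost. If two sessions run this at the same time under repeatable read, one can be chosen as a deadlock victim (error 1205), and under snapshot isolation a conflicting write fails with an update conflict (error 3960). Either way, the calling code needs to be ready to retry.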

If both scalability and data correctness matter, this is worth your time (even if you stick with pessimistic Read Committed as your default). Start by identifying the most frequent and most critical transactions that do modifications for your application, and identify what level of protection they need.

And if you decide that the risk of a few edge conditions is tolerable after looking at this, you might just enable RCSI like Michael J Swart’s team eventually decided to do.

Copyright (c) 2025, Catalyze SQL, LLC; all rights reserved. Opinions expressed on this site are solely those of Kendra Little of Catalyze SQL, LLC. Content policy: Short excerpts of blog posts (3 sentences) may be republished, but longer excerpts and artwork cannot be shared without explicit permission.