If you use readable secondaries in Availability Groups or read scale-out instances in Azure SQL Managed Instance, you may have queries fail repeatedly if there is a glitch and statistics are not successfully ‘refreshed’ on the secondary replica. Those queries may keep failing until you manually intervene.

It’s unclear if Microsoft will ever fix this. There is a well-established support deflection article which documents the issue and provides ‘workarounds’.

As a user on StackExchange wrote in September of 2022, “This is an outstanding bug in SQL Server which has been ignored for quite some time, unfortunately.” Based on a link provided in that post, it appears feedback was provided for this as far back as SQL Server 2012, when AGs were introduced.

More than 12 years later, how viable are readable secondaries / scale-out read servers for production use if it’s acceptable for queries to fail at an undocumented/unknown rate?

It’s not really clear to me what causes this error. Microsoft’s support deflection article says:

This issue occurs because an active transaction prevents the cache invalidation log record from accessing and refreshing the statistics on the secondary replica.

When I ran into this issue, there were no long-running transactions on the primary replica or any secondary replicas. Potentially this had happened at some point in the past and the process that refreshes statistics gave up, or was a victim in a deadlock and didn’t retry? All I have is guesses.

If this issue happens to you, the article presents three options for workarounds:

I don’t love this step as a fix for a production database server. It’s fine on some workloads, but there are plenty where it causes performance issues. The article also indicates that this is not as “permanent” an option as option 3.

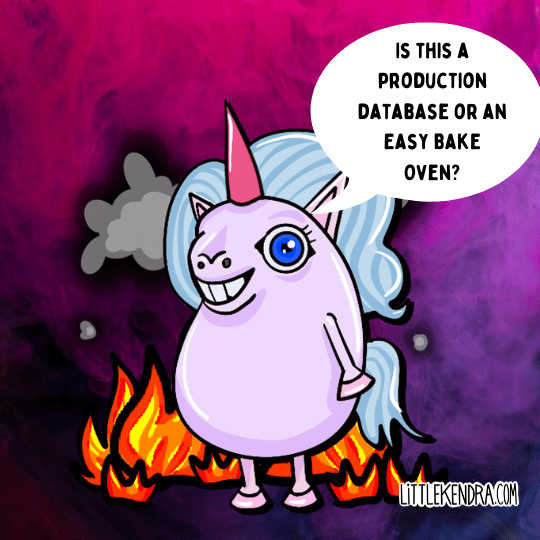

OK, so this option is to take a brief outage on the server? Really? How is this option 2? Is this a production database or an Easy Bake Oven?

I guess I choose this as the “least worst” option. For undisclosed reasons, this is labeled a “more permanent workaround.”

Do I want to automate proactively finding this issue if it happens and repairing it? No.

I don’t think Microsoft understands how often this happens.

First, the article linked above is intended to drive down support requests. This saves money for Microsoft, and frankly users appreciate it because none of us want to work through support tickets. However, it also means that Microsoft doesn’t get signals about how many people are hitting the problem.

I can say that this does still happen with the latest versions of SQL Server and that it occurs on Azure SQL Managed Instance, based on what I have seen and what I see others reporting in blogs and forum posts. The support article also references Azure SQL Database, so presumably it happens there as well.

In Microsoft’s support deflection article, the full sample error is:

Msg 2767, Level 16, State 1, Procedure sp_table_statistics2_rowset, Line [Batch Start Line ]
Could not locate statistics ‘ ’ in the system catalogs.
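Since nobody seems to know how often this happens, one low-effort way to get a number for your own environment is to look for this message text in the errors your application already logs. Here is a minimal Python sketch of that idea. It assumes the error text matches the sample quoted above, and the function names (`missing_statistic`, `tally_missing_statistics`) are hypothetical, not part of any library or of Microsoft's article. It only parses text and does not connect to SQL Server or repair anything.

```python
import re
from collections import Counter

# Assumption: the message text matches the sample in the support article.
# The statistic name may be wrapped in straight or typographic quotes.
MISSING_STATS = re.compile(
    r"Could not locate statistics\s+['‘](?P<stat>.*?)['’]\s+in the system catalogs"
)


def missing_statistic(message):
    """Return the statistic name if the text looks like error 2767, else None."""
    match = MISSING_STATS.search(message)
    if match is None:
        return None
    return match.group("stat").strip()


def tally_missing_statistics(messages):
    """Count how often each statistic name appears in a batch of error messages."""
    counts = Counter()
    for message in messages:
        name = missing_statistic(message)
        if name is not None:
            counts[name] += 1
    return counts
```

Feeding `tally_missing_statistics` a list of logged error strings returns a `Counter` keyed by statistic name, which at least tells you which statistics are involved and how many queries failed on them before anyone intervened.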

The sp_table_statistics2_rowset system procedure is not fully documented. This procedure is run automatically when you use a linked server to run a query, but the article doesn’t mention linked servers at all or say that the bug only occurs when using a linked server.

Since the article explains that a statistic is not present/not usable on the secondary replica itself, presumably this can impact queries running without linked servers, but it’s not clear to me if they fail or if they just ignore the statistic (and maybe get a worse plan). I have seen that a query may fail due to this when run with a linked server, but the same query may run successfully if run against the replica correctly – more on that in an upcoming post.

Given how long this issue has been around and the level of customer impact it seems to have (queries failing repeatedly with no warning until an administrator intervenes), I think this is an important question worth asking.

I would love to hear more from Microsoft about this in their blog posts or support articles:

As it is, it just seems like another reason not to use readable secondaries.

Copyright (c) 2025, Catalyze SQL, LLC; all rights reserved. Opinions expressed on this site are solely those of Kendra Little of Catalyze SQL, LLC. Content policy: Short excerpts of blog posts (3 sentences) may be republished, but longer excerpts and artwork cannot be shared without explicit permission.