Bad News, DBAs, We Are All Developers Now

I sometimes joke that I’m a Junior Developer and a Principal Database Administrator, which is why I have a Staff level title. I’m not sure …

Read MoreThis post demonstrates two related bugs with plan forcing in Query Store which increase the likelihood of slower query execution and application timeouts in SQL Server environments.

These bugs are most likely to impact you if:

The General Purpose tier of Azure SQL Managed Instance and Azure SQL Database feature both slow storage and Automatic Plan Correction enabled by default. So, weirdly enough, your risks of suffering from this problem are high if you are an Azure SQL customer.

Thanks to Erik Darling for his help in diagnosing and reproducing these issues– and his ‘slow compiler’ query used in this post was incredibly helpful to isolate and narrow down these problems.

If a query has a forced plan in query store and the query is cancelled by the calling user/application while the query is still compiling (which does still happen for queries with forced plans), it will:

query_store_plan_forcing_failed event without a clear error message (Error: 316, Severity:-1).GENERAL_FAILURE.Following this, the query will have slower or potentially infinite compile time whenever it needs to recompile.

GENERAL_FAILURE state it takes more than an hour to compile. (I got tired of waiting and cancelled it at that point.)General failure is about right.

This demo does some things that you wouldn’t want to do on a production system, use a test system instead. I reproduced this issue on Microsoft SQL Server 2022 (RTM-CU7) (KB5028743) - 16.0.4065.3 (X64)

First, create a database with Query Store enabled:

USE master;

GO

IF EXISTS

(

SELECT

1/0

FROM sys.databases AS d

WHERE d.name = N'QueryStoreTest'

)

BEGIN

ALTER DATABASE QueryStoreTest SET SINGLE_USER WITH ROLLBACK IMMEDIATE;

DROP DATABASE QueryStoreTest;

END;

CREATE DATABASE QueryStoreTest;

GO

ALTER DATABASE QueryStoreTest SET QUERY_STORE = ON;

GO

/* These options for testing, NOT prod */

ALTER DATABASE QueryStoreTest SET QUERY_STORE

(

OPERATION_MODE = READ_WRITE,

MAX_STORAGE_SIZE_MB = 1000,

INTERVAL_LENGTH_MINUTES = 5,

SIZE_BASED_CLEANUP_MODE = AUTO,

WAIT_STATS_CAPTURE_MODE = ON

);

GO

USE QueryStoreTest;

GO

ALTER DATABASE CURRENT SET COMPATIBILITY_LEVEL = 160;

GOStart this query in one session, let’s call it Session A. Thanks to Erik Darling for this portable example of a slow-compiling query.

SET STATISTICS TIME, IO ON;

GO

WITH val as

(SELECT * FROM (VALUES ( 1 , 2), ( 1 , 2)) as v(i,j) ),

p0 AS (SELECT pt.* FROM val AS pt),

p1 AS (SELECT pp.* FROM p0 AS p CROSS JOIN p0 AS pp),

p2 AS (SELECT pp.* FROM p1 AS p CROSS JOIN p1 AS pp),

p3 AS (SELECT pp.* FROM p2 AS p CROSS JOIN p2 AS pp),

p4 AS (SELECT pp.* FROM p3 AS p CROSS JOIN p3 AS pp),

p5 AS (SELECT pp.* FROM p4 AS p CROSS JOIN p4 AS pp),

p6 AS (SELECT pp.* FROM p5 AS p CROSS JOIN p5 AS pp),

p7 AS (SELECT pp.* FROM p6 AS p CROSS JOIN p6 AS pp),

p8 AS (SELECT pp.* FROM p7 AS p CROSS JOIN p7 AS pp),

p9 AS (SELECT pp.* FROM p8 AS p CROSS JOIN p8 AS pp)

SELECT c = COUNT_BIG(*)FROM p9 AS p;

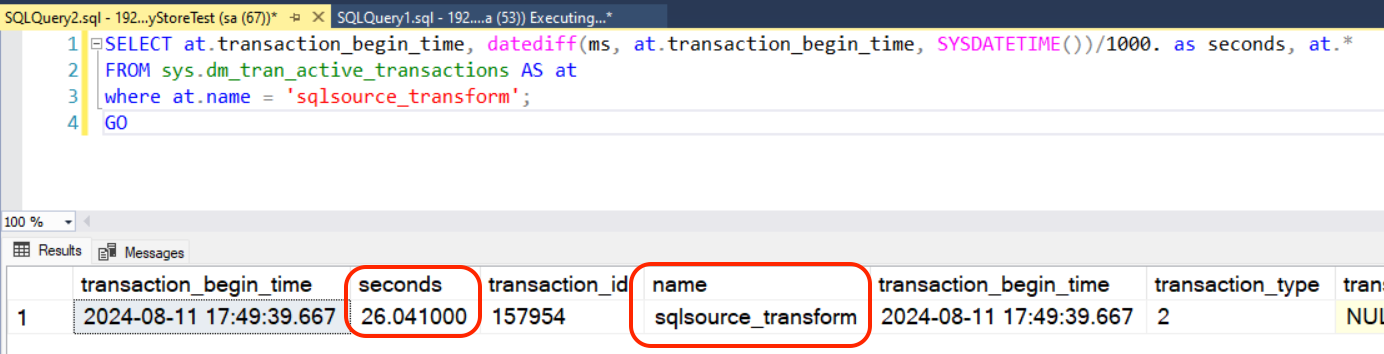

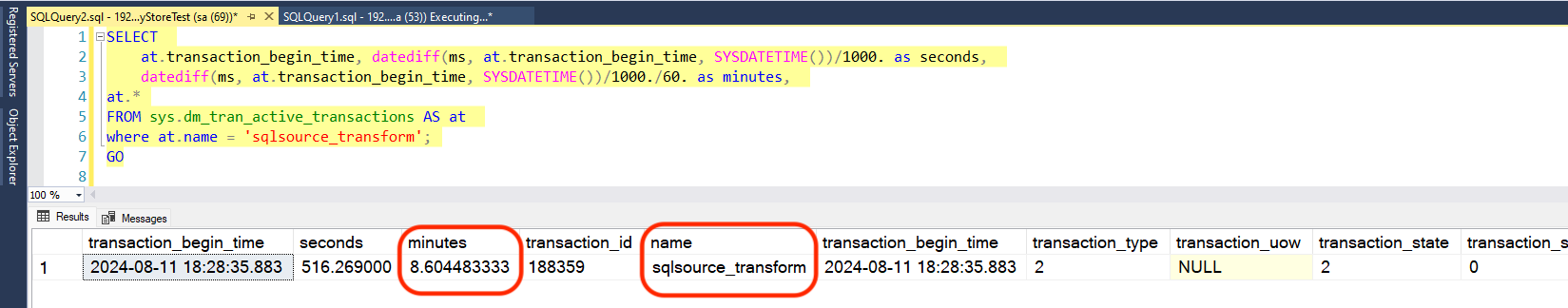

GOMonitor how long it takes to compile by running this query in another session, let’s call it Session B.. I’m currently seeing around 28 seconds on my laptop. The first query doesn’t need to complete execution, it only needs to complete compiling. Cancel the query after it finishes compiling– you’ll know that happens when the query no longer shows up as a row in the query in Session B..

SELECT

transaction_begin_time = at.transaction_begin_time,

seconds = DATEDIFF(SECOND, at.transaction_begin_time, SYSDATETIME()),

at.*

FROM sys.dm_tran_active_transactions AS at

WHERE at.name = N'sqlsource_transform';

GOThis images shows Session B when the query from Session A had been compiling for 26 seconds:

Shortly after this point, it finishes compiling and no longer shows up as a result of this query.

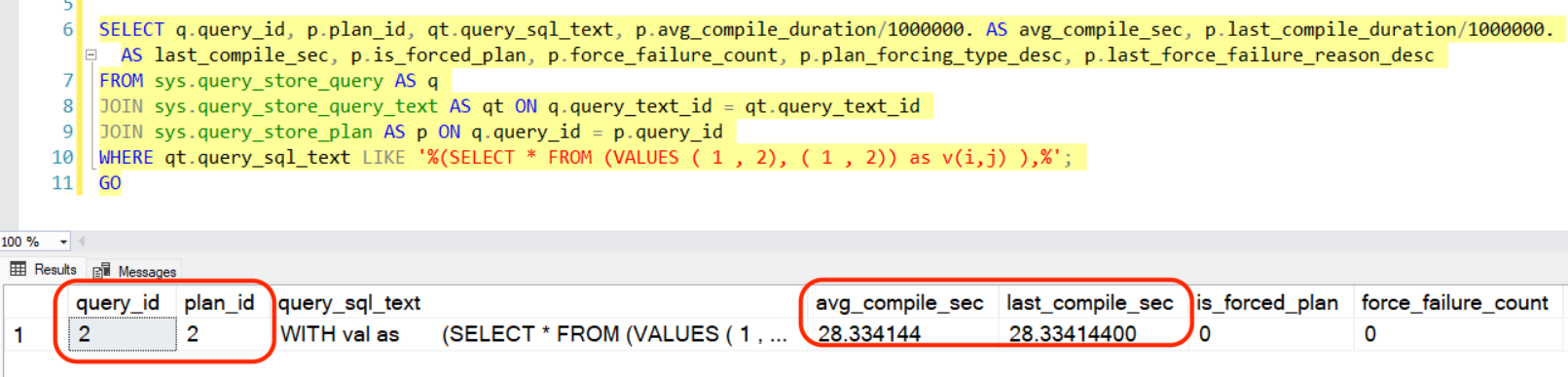

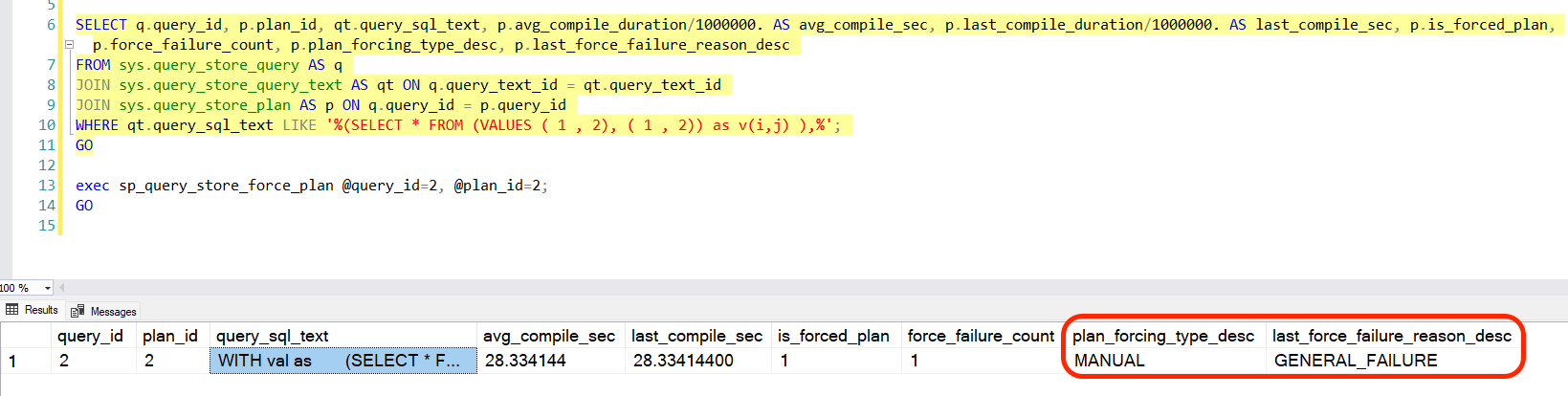

In session B, run this query to find the query_id and plan_id for the long compiler. (I’ll refer to this as the ‘Query Store Status Query’ in following steps.)

SELECT

query_id = q.query_id,

plan_id = p.plan_id,

query_sql_text = qt.query_sql_text,

avg_compile_sec = p.avg_compile_duration / 1000000.,

last_compile_sec = p.last_compile_duration / 1000000.,

is_forced_plan = p.is_forced_plan,

force_failure_count = p.force_failure_count,

plan_forcing_type_desc = p.plan_forcing_type_desc,

last_force_failure_reason_desc = p.last_force_failure_reason_desc

FROM sys.query_store_query AS q

JOIN sys.query_store_query_text AS qt

ON q.query_text_id = qt.query_text_id

JOIN sys.query_store_plan AS p

ON q.query_id = p.query_id

WHERE qt.query_sql_text LIKE N'%(SELECT * FROM (VALUES ( 1 , 2), ( 1 , 2)) AS v(i,j) ),%';

GOIn my case, this is query_id = 2 and plan_id = 2.

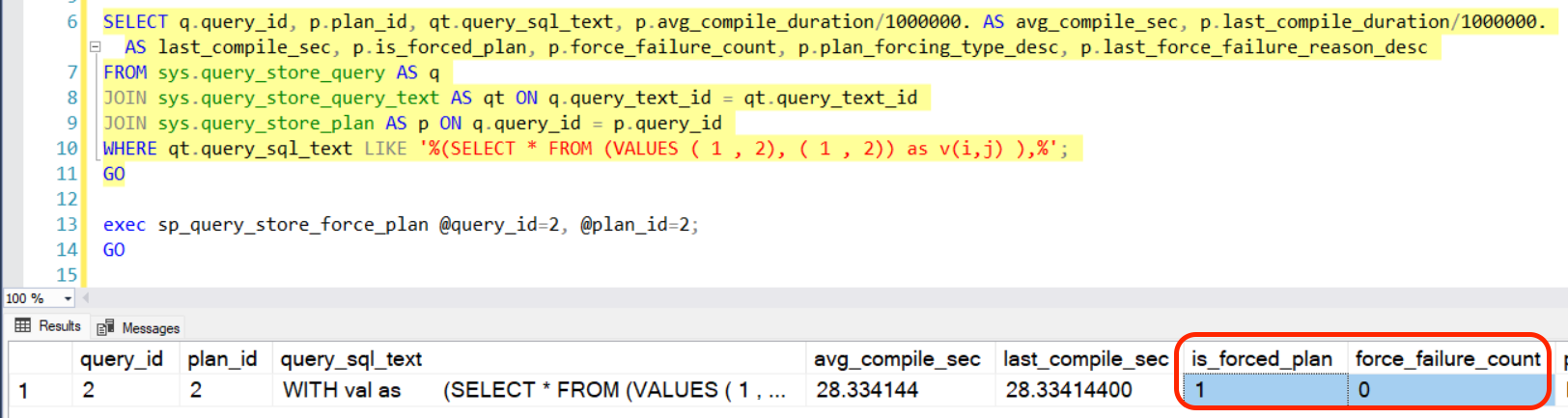

Run the following query in Session B to force the plan. Specify the correct query id and plan id that you identified in the query above:

EXECUTE sys.sp_query_store_force_plan

@query_id = 2,

@plan_id = 2;

GOAfter forcing the plan, rerun the Query Store Status Query and you should see that the plan has been forced. There haven’t been any failures so far.

If you would like, you can now create and start an Extended Events session looking for the query_store_plan_forcing_failed event. It will fire when you do this next step.

We need to do something to cause the query to recompile. For real world queries, this might be an automatic statistics update, a failover, or anything else that triggers a fresh compilation.

After doing this sequence of events, rerun the Query Store Metadata Query to review the status now:

forced_failure_count = 1

last_force_failure_reason_desc = GENERAL_FAILURE

Monitor its compilation time in Session B using sys.dm_tran_active_transactions to look for ‘sqlsource_transform` as before.

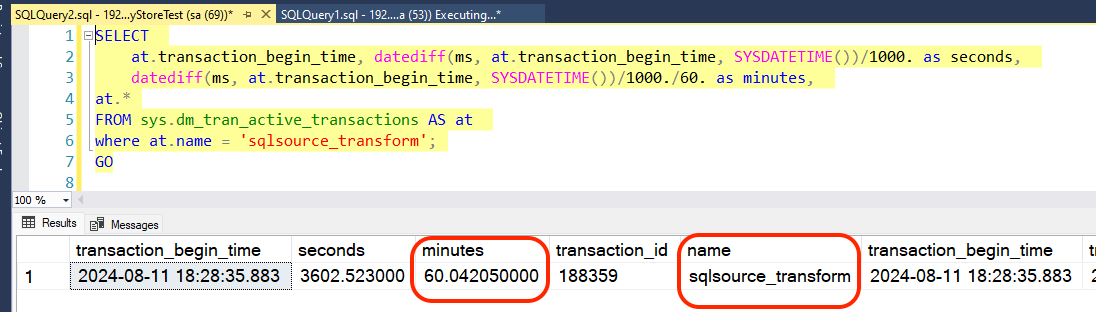

It will compile for a very long time – more than 1 hour on my laptop.

Here it is at 8 minutes, still compiling:

And here it is just past an hour, still compiling:

Reminder: these extra-long compile times will not be written to Query Store if the query is cancelled before compilation finishes.

This invisibility in Query Store makes identifying and diagnosing this issue even tricker than normal. Any external monitoring you have may report queries as having run and timed out over and over again, but when you look in Query Store, you don’t see the query even executing.

It feels to me like there are multiple bugs in here that Microsoft should fix:

GENERAL_FAILURE status in Query Store when a plan is forced. Applications can have timeouts set at all sorts of things.GENERAL_FAILURE as a plan forcing status should not take longer to compile.And I do not think Automatic Plan Correction should be enabled by default in Azure SQL Managed Instance or Azure SQL Database.

I have reported these issues to Microsoft via a support ticket.

If you ever force plans— and especially if you use the Automatic Plan Correction feature, which frequently forces plans– you should create a SQL Server Agent Job that regularly looks for failed forced plans with a status of GENERAL_FAILURE and unforce them.

Consider turning off Automatic Plan Correction if you have it enabled.

Copyright (c) 2025, Catalyze SQL, LLC; all rights reserved. Opinions expressed on this site are solely those of Kendra Little of Catalyze SQL, LLC. Content policy: Short excerpts of blog posts (3 sentences) may be republished, but longer excerpts and artwork cannot be shared without explicit permission.